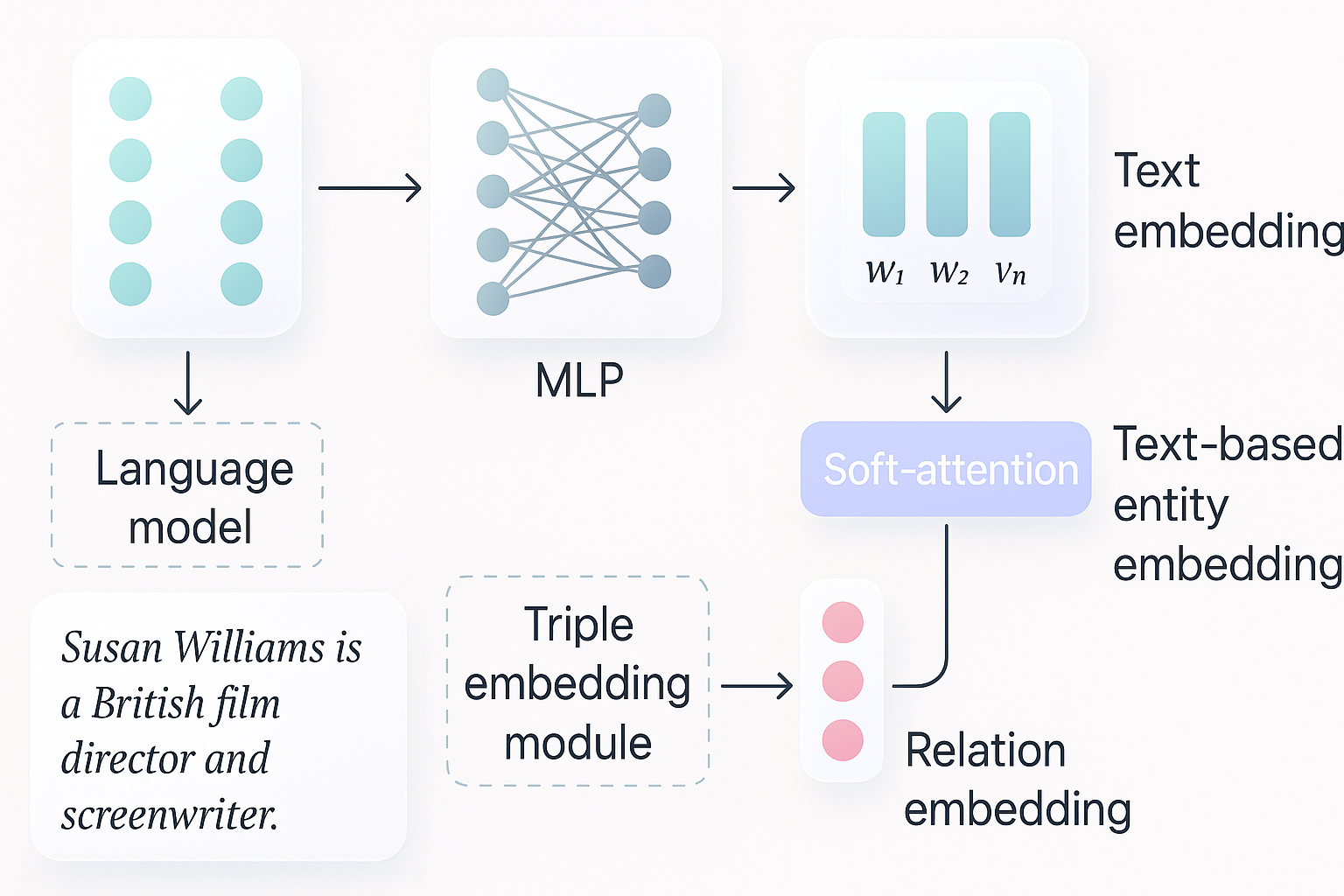

This step is about converting each chunk into a vector embedding – a numerical representation that captures the semantic content of the text. These embeddings will later be indexed for similarity search. Choosing the right embedding model (and strategy) is crucial for accurate retrieval.

There are a few options for generating embeddings in 2025, each with trade-offs:

- Use a pre-trained embedding model (off-the-shelf)

Many open-source models are available that produce high-quality sentence or paragraph embeddings. For example, Databricks Vector Search supports models like E5, BGE, and InstructorXL, which are state-of-the-art general-purpose embedding models. These models are often trained on massive datasets to capture semantic similarity. Using an open-source model (from the Hugging Face Hub) has the benefit of being free and private (you run it on your own infrastructure), and you can fine-tune it if needed.

- Fine-tune or customize an embedding model

For domain-specific data, fine-tuning a model on in-domain text can improve retrieval. NVIDIA NeMo provides tools to train or fine-tune embeddings (for example, using its Megatron models or smaller Transformer encoders). You could also use techniques like knowledge distillation to tailor embeddings to your data. If you have labeled similar vs. dissimilar pairs (or even just a large corpus of domain text), a fine-tuned embedding model might capture nuances (e.g., legal or medical terms) better than a generic model.

- Use proprietary embedding APIs

Services like OpenAI’s text-embedding APIs or Cohere’s embeddings can generate vectors from text easily. For instance, OpenAI’s `text-embedding-ada-002` is widely used. The advantage is that you get high-quality embeddings without hosting a model, but sending data to an external API may raise privacy issues and incur significant cost at scale. For sensitive enterprise data, an OpenAI-compatible local model or a hosted instance in your VPC might be preferable.

- Leverage Databricks Model Serving

In Databricks, you can deploy an embedding model as a Model Serving endpoint and then let Databricks Vector Search use it to automatically embed new data. This is a neat option: you could serve a Hugging Face model or a custom model on Databricks, and as you add documents to the Delta table, Vector Search will call that endpoint to get embeddings for each chunk.

For our pipeline, let’s illustrate generating embeddings using a Hugging Face model (off-the-shelf). We’ll assume we have our list of text chunks ready:

```python
# Install sentence-transformers if not already installed (notebook cell)
!pip install -q sentence-transformers

from sentence_transformers import SentenceTransformer

# Load a pre-trained embedding model (E5-large in this example)
model = SentenceTransformer("intfloat/e5-large")  # embedding dimension: 1024

# Suppose chunks is a list of text chunks.
# E5 models expect a "passage: " prefix on documents (and "query: " on queries).
passages = [f"passage: {chunk}" for chunk in chunks]

embeddings = model.encode(
    passages,
    batch_size=64,
    convert_to_numpy=True,
    normalize_embeddings=True,
)

print(f"Generated {len(embeddings)} embeddings of dimension {embeddings.shape[1]}.")
```

In this code, we use the E5 model, a strong general-purpose choice for search and retrieval. Passing `normalize_embeddings=True` scales each vector to unit length (you could also normalize manually), so the dot product of two embeddings equals their cosine similarity. The output is an array of shape (num_chunks, embedding_dim).
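To make the normalization point concrete, here is a minimal sketch in plain Python (no model required) showing that the dot product of unit-length vectors matches cosine similarity. The helper names `l2_normalize`, `dot`, and `cosine_similarity` are illustrative, not part of any library, and the 3-d vectors are toy values standing in for real embeddings.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        return list(vec)
    return [x / norm for x in vec]

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Cosine similarity computed directly from the unnormalized vectors."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

# Toy vectors standing in for real embeddings
a = [3.0, 4.0, 0.0]
b = [4.0, 3.0, 0.0]

cos = cosine_similarity(a, b)
dot_normalized = dot(l2_normalize(a), l2_normalize(b))

print(f"cosine similarity:         {cos:.4f}")
print(f"dot product after L2 norm: {dot_normalized:.4f}")
```

Because the two values agree, an index that only supports inner-product search can still rank by cosine similarity as long as every stored vector and every query vector is normalized first.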

We should store these embeddings alongside the chunk metadata (e.g., as a column in the Delta table, or in a separate vector index structure). If using Databricks Vector Search, the platform will handle storing the embeddings in its index. If we manage it ourselves, we could store embeddings in a NumPy file or a database – but often it’s easier to use a vector database library which we’ll discuss next.
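As a rough sketch of the "embedding alongside metadata" layout, the snippet below pairs each chunk with its vector in a plain record and serializes the records as JSON Lines. The field names (`chunk_id`, `source`, `text`, `embedding`) and the `build_records` helper are assumptions made for illustration; in practice you would match the schema of your own Delta table or vector store.

```python
import json

def build_records(chunks, embeddings, source):
    """Pair each chunk with its embedding and some minimal metadata."""
    if len(chunks) != len(embeddings):
        raise ValueError("chunks and embeddings must have the same length")
    return [
        {
            "chunk_id": f"{source}-{i}",  # hypothetical ID scheme
            "source": source,
            "text": text,
            "embedding": [float(x) for x in vector],
        }
        for i, (text, vector) in enumerate(zip(chunks, embeddings))
    ]

chunks = ["First chunk of text.", "Second chunk of text."]
embeddings = [[0.6, 0.8], [1.0, 0.0]]  # toy 2-d vectors standing in for real embeddings

records = build_records(chunks, embeddings, source="doc1")

# Serialize as JSON Lines (one record per line), then read it back
jsonl = "\n".join(json.dumps(record) for record in records)
restored = [json.loads(line) for line in jsonl.splitlines()]

print(restored[0]["chunk_id"], len(restored[0]["embedding"]))
```

Keeping the text, the source identifier, and the vector in one row means a similarity hit can be traced back to its original chunk without a second lookup.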

Best practices for embeddings:

- Ensure the embedding model is aligned with the retrieval task

For QA-style RAG, we want embeddings that capture topical similarity. Models like E5 and InstructorXL are trained on question-answer or instruction datasets, making them suitable. In contrast, embedding models tuned for other tasks (like STS benchmarks or paraphrase identification) usually still work for semantic search, but some might not handle very long text well. Choose a model known to work on full-sentence or paragraph inputs.

- Maintain consistency

Use the same embedding model for documents and queries. This seems obvious but is worth stating: the query must be embedded with the same model so that the similarity comparison is valid. Some models, E5 included, also expect different input prefixes for the two sides (`query: ` for queries and `passage: ` for documents), so apply them consistently. (There are advanced techniques with dual-encoders or cross-encoders for re-ranking, but the baseline is one model for all.)

- Batch and optimize throughput

Generating embeddings for thousands of chunks can be slow. Use batching (as in the code above) and, if GPUs are available, make sure the model actually runs on them. If using Databricks with GPUs (on AWS, for example, p3 instances or instances with A10 GPUs), you can install `torch` with CUDA and run the model on GPU. Libraries like SentenceTransformers or Hugging Face Accelerate can help utilize multiple GPUs if available. NVIDIA’s NeMo toolkit also offers optimized inference for its models; for example, NeMo has optimized Megatron encoders you could use.

- Consider embedding size vs. accuracy trade-offs

Larger embeddings (768d, 1024d) might yield slightly better recall than smaller ones (256d) but will use more memory in the vector store and may be slower to search. With approximate indexing and compression techniques, memory might not be a huge issue, but it’s something to be aware of. Experiment with different models to see what dimensionality is truly needed for your domain.
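To put rough numbers on the size trade-off, the sketch below estimates the raw storage needed for the vectors alone. It assumes uncompressed float32 values (4 bytes each) and ignores index overhead and metadata; the one-million-chunk corpus and the helper names `index_size_bytes` and `to_gib` are made up for illustration.

```python
def index_size_bytes(num_vectors, dim, bytes_per_value=4):
    """Raw size of the vectors alone: count x dimension x bytes per value."""
    return num_vectors * dim * bytes_per_value

def to_gib(num_bytes):
    """Convert bytes to GiB (1 GiB = 1024**3 bytes)."""
    return num_bytes / (1024 ** 3)

num_chunks = 1_000_000  # hypothetical corpus size

# Compare the dimensionalities mentioned above, assuming float32 storage
sizes = {dim: index_size_bytes(num_chunks, dim) for dim in (256, 768, 1024)}

for dim, size in sizes.items():
    print(f"{dim:>5}d: {to_gib(size):.2f} GiB")
```

Under these assumptions, a million 1024-d vectors take about 3.81 GiB versus about 0.95 GiB at 256d. Raw storage scales linearly with dimension, so it is worth checking whether the larger model's recall gain justifies the extra memory.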

At the end of this step, we should have all documents represented as vectors. Now we need a system to store these vectors and enable efficient similarity search for incoming queries.