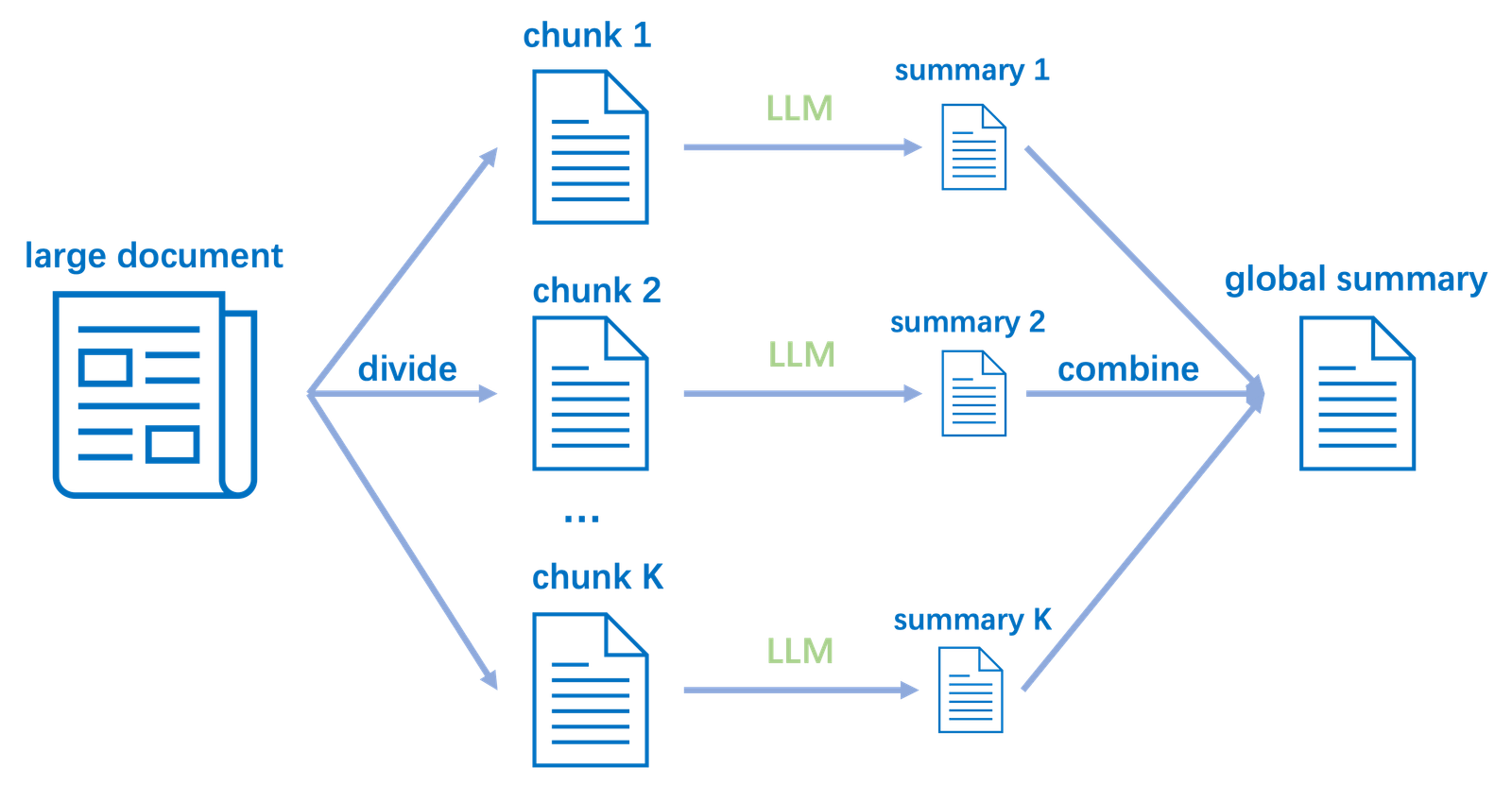

After curation, we have a collection of documents (or long text files). The next step is to split these documents into smaller chunks suitable for retrieval. Chunking is critical in RAG because retrieving an entire long document in response to a query can be inefficient or exceed the LLM’s context window. Instead, we break documents into semantically coherent passages (paragraphs, sections, sentences) that can act as standalone pieces of information.

As noted in Databricks’ documentation, before storing vectors “raw… data from various sources need to be cleaned, processed (parsed/chunked), and embedded with an AI model”. Effective chunking ensures that each piece of text is just the right size to capture a complete idea while not overwhelming the LLM. Here are guidelines and best practices for chunking:

- Aim for chunks that fit the LLM context

Retrieved chunks plus the user’s query will be concatenated as input to the LLM. If your LLM’s context length is, say, 4096 tokens, you might target each chunk to be ~200-300 tokens so that even if you include 3-5 top chunks plus the question and some prompt text, you stay within limits. For newer 8k or 16k-token models, chunks can be larger, but smaller chunks generally improve retrieval granularity. - Split on natural boundaries

Use the document structure to guide chunking – e.g., paragraphs, bullet points, or section headings should usually stay together. You can also leverage sentence boundaries. This keeps each chunk semantically self-contained (the model can read it without needing text that was cut off). - Use overlap to avoid lost context

Adding a slight overlap between consecutive chunks (e.g., repeating a sentence or two from the end of one chunk at the start of the next) can help the retriever not miss relevant info that lies on a boundary. This way, if a query hits a point right at the edge of a chunk, there’s a higher chance that at least one chunk contains the needed context. - Retain metadata for each chunk

When splitting, make sure to tag each chunk with identifiers of the source document, page number, section title, etc. This metadata is useful later for two reasons: (1) you can surface source attributions in the answer (e.g., “According to Document A…”), and (2) you can filter or rank results by source (for example, prefer chunks from a more recent document if duplicates exist).

In practice, chunking can be done using simple scripts or with libraries. For example, we might use a Python function to split text by a max length:

def chunk_text(text, max_tokens=256):

"""Split text into chunks of approximately max_tokens (simple word-based splitter)."""

words = text.split()

chunk = []

chunks = []

for word in words:

chunk.append(word)

# Approximate token count by word count for simplicity

if len(chunk) >= max_tokens:

chunks.append(" ".join(chunk))

chunk = []

if chunk:

chunks.append(" ".join(chunk))

return chunks

# Example usage:

text = open("example_doc.txt").read()

paragraphs = text.split("\n\n") # split by blank lines as a first step

chunks = []

for para in paragraphs:

chunks.extend(chunk_text(para, max_tokens=200))

print(f"Created {len(chunks)} chunks from the document.")In this example, we first split by paragraphs (assuming blank lines separate them), then further split long paragraphs into pieces of ~200 words. In a real scenario, you might use a tokenizer (from Hugging Face Transformers, for instance) to count tokens more precisely rather than words, and you might preserve sentence boundaries when splitting. There are also dedicated tools (like Langchain’s RecursiveCharacterTextSplitter) that implement these strategies (e.g., split by paragraphs, then if a chunk is still too large, split by sentences, etc., with overlaps).

Why Chunking Matters

Smaller, well-formed chunks improve retrieval precision – the vector search can pinpoint the exact passage that answers the question, rather than returning an entire document where the answer is buried. It also helps the LLM focus only on relevant text, which can improve generation quality and reduce the chance of the model drifting off-topic. Without chunking, you might hit context length limits or retrieve a lot of irrelevant text (if a document is retrieved for one small relevant snippet).

One more preprocessing step to consider is normalizing text in the chunks: for example, lowercasing (for some embedding models this might not matter), removing excessive whitespace, or correcting obvious OCR errors in text (if you parsed PDFs). Ensure the chunks are in a reasonably consistent format and encoding. This was partially done in the curation stage (e.g., NeMo Curator’s Unicode fixes), but it’s good to double-check before embedding.

Finally, store the resulting chunks back to the Delta Lake as a separate table (for example, a documents_chunks Delta table with each chunk and its metadata). In Databricks, you might take the original curated table and use a UDF or Pandas UDF to apply the chunking function, then explode the chunks into a new table. This chunk table will be the one we use for embedding generation.